After upgrading to a larger instance type, we've begun experiencing problems where jobs aren't able to complete before the 50-second kill command. A COPY loading ~200 records from 4 files into a table of 20 million to 75 million records takes longer than 50 seconds; note that a typical load adds only ~200 rows to a 75-million-record table. This log is produced when a transaction exceeds 50 seconds: `"prefix": "myco-prod-enriched/company/acme", START RequestId: be79dfe2-c3c5-11e5-8dce-6ddde2fa925d Version: $LATEST`. The timeout error also "blocks" that table when a second loader attempts yet another load (the timestamps in this example are from unrelated commands, but the "transaction" error is common), and we frequently see a large number of entries when running queryBatches.js for locked records.

Is there something we've simply missed? Is there any way to give the loader more time to process? Will this just become an issue down the line as the number of records per batch increases? Should we be loading fewer or more, larger or smaller sets of files? Given our experience with Redshift, that would not seem to fix the timeout problem. Is there something we could or should do to configure our Redshift cluster to load more quickly?
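One hedged way to approach "giving the loader more time" is to check, before issuing a COPY, whether the time left in the current invocation can plausibly cover it. This is a minimal sketch that assumes the 50-second limit is the Lambda function's own timeout (in the Node.js runtime, `context.getRemainingTimeInMillis()` reports the time left). `shouldStartCopy`, the list of recent COPY durations, and the 5-second safety margin are hypothetical and not part of the loader.

```javascript
// Hypothetical helper (not part of the loader): decide whether a COPY should
// start, given the time left in the current Lambda invocation.
// In a real handler, remainingMs would come from context.getRemainingTimeInMillis().
//
// remainingMs  - milliseconds left before the invocation is killed
// recentCopyMs - durations (ms) of recent COPYs into the same table (assumed to be tracked somewhere)
// safetyMs     - margin reserved for commit and cleanup (assumed value)
function shouldStartCopy(remainingMs, recentCopyMs, safetyMs = 5000) {
  if (recentCopyMs.length === 0) {
    // No history yet: only require the safety margin.
    return remainingMs > safetyMs;
  }
  // Use the slowest recent COPY as a conservative estimate of the next one.
  const worstCase = Math.max(...recentCopyMs);
  return remainingMs > worstCase + safetyMs;
}
```

In a handler this might be called as `shouldStartCopy(context.getRemainingTimeInMillis(), recentDurations)`. Deferring a load that is unlikely to finish avoids starting a COPY that would be killed mid-transaction, though it does not make the COPY itself any faster.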

About once a week a batch gets locked, causing DynamoDB alarms to go off as the loader goes into a tight loop (100 locked-batch queries). Once it gets into this state, data is ignored until we run unlockBatch.js manually. Data recovery after this type of failure has proven painful.
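The tight loop of locked-batch queries could, in principle, be bounded with capped exponential backoff and a hard attempt limit. This is a minimal sketch assuming the caller supplies an async `isLocked` check; `isLocked`, `waitForUnlock`, `backoffDelays`, and the default delays are hypothetical names and values, not functions from the loader, queryBatches.js, or unlockBatch.js.

```javascript
// Capped exponential backoff schedule: baseMs, 2*baseMs, 4*baseMs, ... never above capMs.
function backoffDelays(maxAttempts, baseMs, capMs) {
  const delays = [];
  for (let i = 0; i < maxAttempts; i++) {
    delays.push(Math.min(capMs, baseMs * 2 ** i));
  }
  return delays;
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Poll a lock check a bounded number of times instead of re-querying in a tight loop.
// isLocked is a hypothetical async function (e.g. wrapping a DynamoDB read)
// that resolves to true while the batch is still locked.
async function waitForUnlock(isLocked, { maxAttempts = 5, baseMs = 500, capMs = 8000 } = {}) {
  for (const delay of backoffDelays(maxAttempts, baseMs, capMs)) {
    if (!(await isLocked())) return true; // lock released: safe to proceed
    await sleep(delay); // wait longer after each failed check
  }
  return false; // still locked: let the caller raise one alarm instead of looping
}
```

With the defaults, a batch that stays locked is checked five times with 15.5 seconds of cumulative waiting (500 + 1000 + 2000 + 4000 + 8000 ms) before giving up, rather than being queried 100 times in quick succession. Backoff alone would not release a lock left behind by a killed invocation; that still needs an unlock step.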

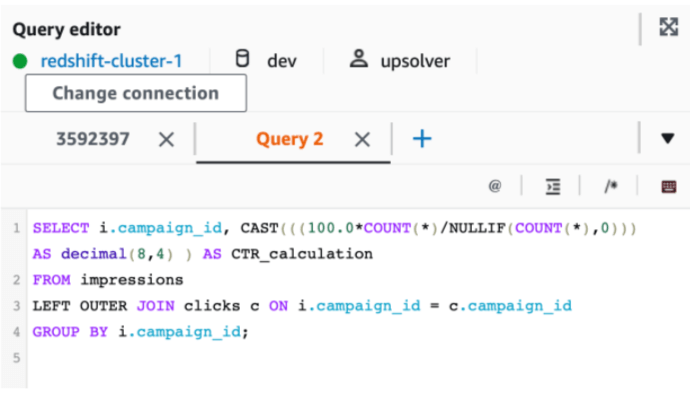

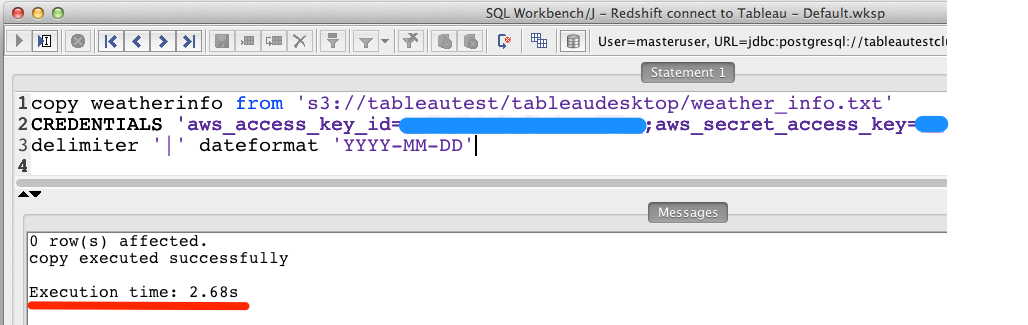

We're currently using the loader to COPY analytics data out of S3 into Redshift. Each load (… file) contains approximately 200 records, which we insert into a Redshift table that currently holds around 75 million rows. This may be multiple issues, or it may be a configuration issue, but we thought we'd be succinct and start with a single issue.
